Francesca Morris

"(in).visible bodies"

Keywords: archival practice, body, collage, documentation, feminist methodologies, social media

Keywords: censorship, hashtag, Instagram, bodies, women

censorship /ˈsɛnsəʃɪp/ noun

- the suppression or prohibition of any parts of books, films, news, etc. that are considered obscene, politically unacceptable, or a threat to security.¹

(in).visible bodies began as an exploration of the unintended compilation of information, images, and data on the social media platform Instagram. On the surface, this compilation happens because users assign hashtags to their uploaded images by prefixing words with the hash symbol (#). Behind the user interface, the hash symbol can also be found within the code that makes up the Instagram algorithm. This code screens uploaded images, adds alternative text to them, and then compiles them in a particular order.

On either side of the interface, the hash symbol serves diametrically opposed functions. A user’s hashtag reveals the underlying topic of a photograph and groups it with other photographs on the same topic, whilst within code the hash symbol conceals non-functional comments that programmers leave for one another.
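The two opposing roles of the hash symbol can be illustrated with a short sketch. Python is used here purely as an example, since nothing in the project indicates which languages Instagram’s code is written in, and the caption and the `extract_hashtags` function are hypothetical. Inside a string, `#` is ordinary data that can be searched to reveal a topic; at the start of a comment, it tells the interpreter to ignore the rest of the line.

```python
import re

# A comment like this one is discarded by the Python interpreter:
# programmers reading the source can see it, but it has no effect when the code runs.

caption = "self-portrait #art #body"  # inside a string, '#' is ordinary data


def extract_hashtags(text: str) -> list:
    """Return the hashtags in a caption, i.e. the words prefixed with '#'."""
    # Here the hash symbol reveals: it marks the topic words used to group posts.
    return re.findall(r"#(\w+)", text)


print(extract_hashtags(caption))
```

Running it prints `['art', 'body']`; the comment lines leave no trace in the output.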

The concealing nature of the hash symbol was extended to Instagram’s discriminatory censorship, and subsequent concealment, of female bodies. (in).visible bodies is an ongoing collaboration with an anonymous artist whose self-expressive portraits have previously been censored on Instagram.

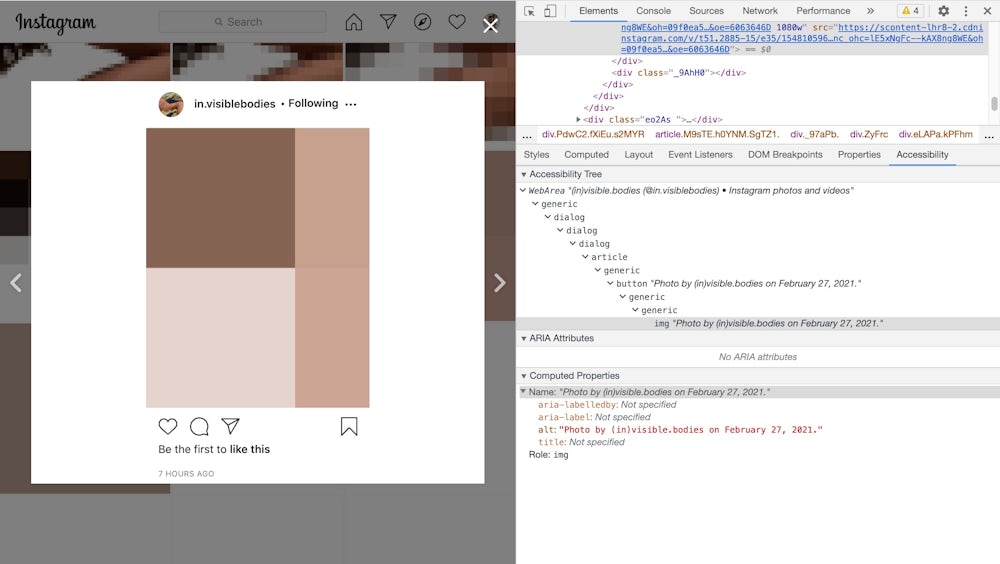

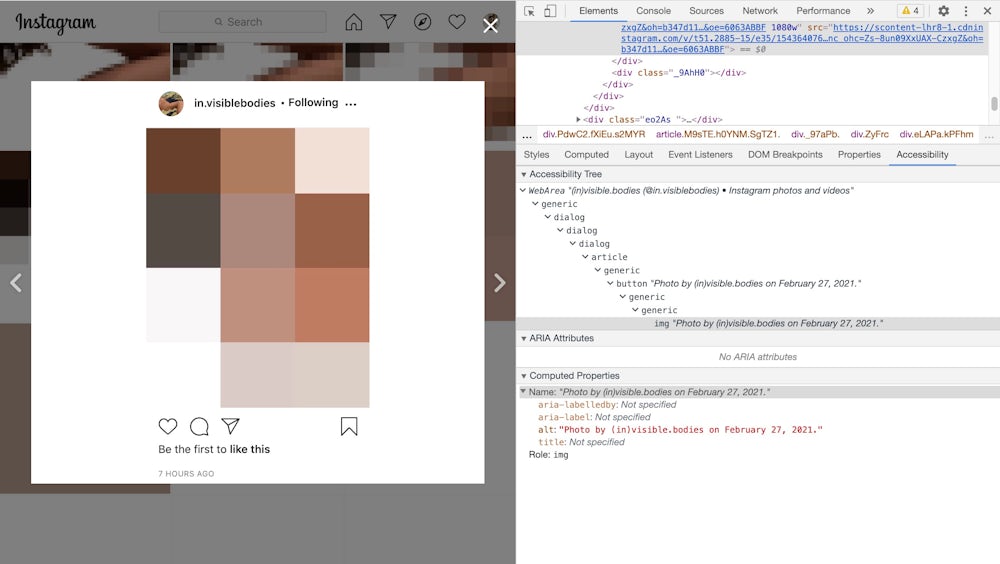

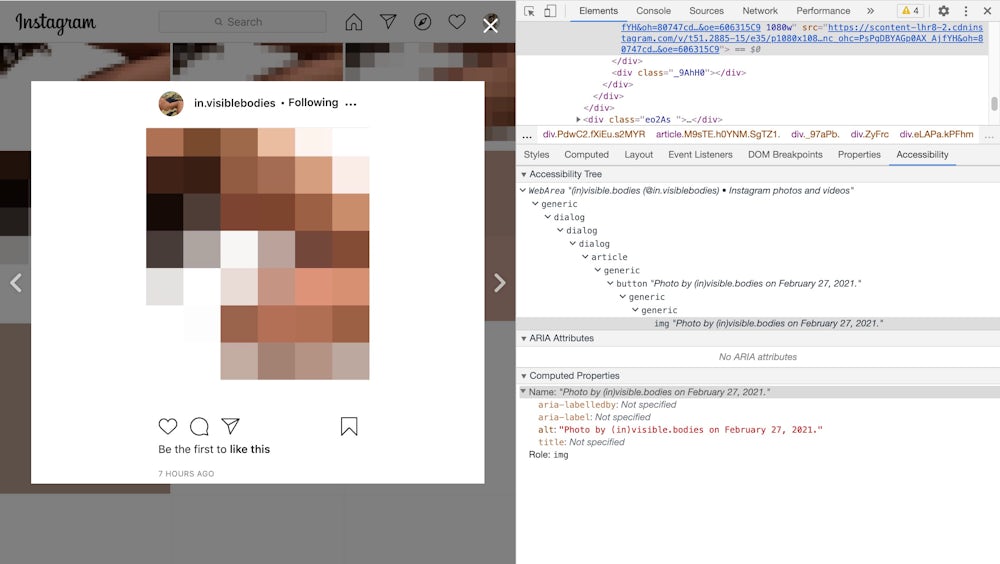

This project takes the artist’s censored portraits and alters them to varying extents through pixelation, crosshatching, and collage. The altered portraits are then re-uploaded to the Instagram account @in.visiblebodies to test whether the screening process still judges that the post goes against Instagram’s Community Guidelines.² These experiments are then shifted from the digital to the physical by compiling the altered photographs, screenshots of Instagram’s warnings, and the alternative text generated by the algorithm that screens each image. The results are gathered in the physical form of a zine, where the artworks can no longer be censored.

The key intervention of the project is the pixelation of the artist’s censored portraits. This process highlights the historic and current use of pixelation in the media to obscure nudity in an image. Here, however, the entire photograph is pixelated, rather than only the area where nudity is present, in an attempt to question the arbitrary morality imposed by Instagram itself.

The accompanying video documents the compilation of the artist’s pixelated portraits that were previously censored on Instagram. By decreasing the degree of pixelation exponentially, it tests the resolution at which the Instagram algorithm can “see”. The working assumption is that the resolution at which a portrait is censored is also the resolution at which it becomes visible to the algorithm.
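A minimal sketch of how an exponentially decreasing pixelation could be produced, assuming pixelation by block averaging on a greyscale image and a block size that halves at each step. The function names, the halving factor, and the toy 4×4 image are illustrative assumptions rather than the settings used for the portraits; each frame in the schedule is the kind of image that would be re-uploaded to find the block size at which a post is first flagged.

```python
def pixelate(image, block):
    """Return a copy of a greyscale image (a list of rows of ints) in which
    every block x block tile is replaced by the mean of its pixels, rounded down."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Tiles at the right and bottom edges may be smaller than block x block.
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            values = [image[r][c] for r in rows for c in cols]
            mean = sum(values) // len(values)
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


def pixelation_schedule(start_block, factor=2):
    """Block sizes that shrink geometrically, e.g. 32, 16, 8, 4, 2, 1 for factor 2."""
    if factor < 2:
        raise ValueError("factor must be an integer of 2 or more")
    sizes = []
    block = start_block
    while block >= 1:
        sizes.append(block)
        block //= factor
    return sizes


if __name__ == "__main__":
    # Hypothetical 4x4 greyscale "portrait" standing in for a real photograph.
    toy = [
        [0, 0, 255, 255],
        [0, 0, 255, 255],
        [10, 20, 30, 40],
        [50, 60, 70, 80],
    ]
    for b in pixelation_schedule(4):
        # Each frame would be re-uploaded in turn; here it is only printed.
        print(b, pixelate(toy, b))
```

Halving is only one reading of “exponentially decreasing”; any integer factor of 2 or more gives a geometric schedule, and a larger factor reaches full resolution in fewer uploads.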

1. ‘Censorship, n.’, OED Online (Oxford University Press) https://www.oed.com/view/Entry/29607 [accessed 6 January 2021].

2. ‘Instagram Community Guidelines FAQs’ https://about.instagram.com/blog/announcements/instagram-community-guidelines-faqs [accessed 23 February 2021].